MNIST is a dataset of handwritten digit images used to train a machine to recognize numerals. It is one of the most popular and suitable subjects for getting started with deep learning.

Throughout this post, code snippets are provided with explanations, and the full code is uploaded at the following links:

This post was written with reference to the following sources:

- TensorFlow 2 quickstart for experts

- PyTorch > Tutorials > Neural Networks

- FastCampus > DeepLearningLecture

TensorFlow version

1. Exploratory Data Analysis (EDA)

To train a deep learning model, the first step is to explore and analyze the given dataset. This stage gives us hints for designing the structure of the model. In EDA, we check the following:

First, we check the size and shape of the feature data and the target data. From the result, we can determine the proper input and output shapes for our model: each input is a 28 by 28 matrix (or tensor), and each output is a scalar.

Second, we check the values of the target data. The targets are discrete, so the model will be a classification model, and from now on we will call the targets labels. We also need to check whether the distribution of labels in the training and test datasets is biased. A model trained on a biased dataset will be biased as well. This is one of the main reasons to analyze the given dataset before building and training the model.
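Both checks can be done with NumPy alone. This sketch assumes the data are already NumPy arrays, for example from `tf.keras.datasets.mnist.load_data()`. The helper name `summarize_split` is hypothetical, and the demo uses random stand-in arrays with MNIST's shapes, not the real data.

```python
import numpy as np

def summarize_split(x, y, num_classes=10):
    """Return feature/label shapes, the distinct label values, and the share of each class."""
    # Count samples per class; minlength keeps classes that never occur (count 0)
    counts = np.bincount(y, minlength=num_classes)
    return {
        "x_shape": x.shape,
        "y_shape": y.shape,
        "label_values": np.unique(y).tolist(),
        "class_ratio": counts / counts.sum(),
    }

# Hypothetical stand-in data with MNIST-like shapes (NOT the real dataset)
rng = np.random.default_rng(0)
x_demo = rng.integers(0, 256, size=(600, 28, 28)).astype(np.uint8)
y_demo = rng.integers(0, 10, size=(600,))

info = summarize_split(x_demo, y_demo)
print(info["x_shape"], info["y_shape"])  # (600, 28, 28) (600,)
print(np.round(info["class_ratio"], 3))
```

On the real data, call it once for the training split and once for the test split, then compare the two `class_ratio` arrays. If every ratio is close to 0.1, the ten digits are roughly balanced.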

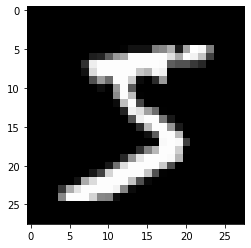

(Optional) Check the actual images in the feature data. You do not need to see the images to train or evaluate the model. Looking at them does, however, give you an intuitive understanding that helps when you analyze the dataset and design the model's structure.
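The usual tool for this is a plotting call such as matplotlib's `imshow`. The hypothetical helper `to_ascii` below is a swapped-in alternative with no plotting dependency, not the original post's code. It prints a grayscale image as text and treats pixels at or above an assumed threshold of 128 as ink.

```python
import numpy as np

def to_ascii(image, threshold=128):
    """Render a 2-D grayscale image as text: '#' for bright pixels, '.' otherwise."""
    rows = []
    for row in image:
        rows.append("".join("#" if px >= threshold else "." for px in row))
    return "\n".join(rows)

# Hypothetical 28x28 "digit": a vertical stroke, roughly like a "1"
img = np.zeros((28, 28), dtype=np.uint8)
img[4:24, 13:15] = 255
print(to_ascii(img))
```

With real data, print `to_ascii(x_train[0])` next to `y_train[0]` to check that the image matches its label.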

2. Data preprocessing

First, the given dataset needs to be reshaped properly. The feature data need an additional channel dimension so they can be fed to convolution layers. The labels also need to be converted from label encoding into one-hot encoding for a classification model [1]. Second, the feature data have to be normalized [2]. Normalization scales all features to the same range, which prevents one feature from dominating the others. Lastly, we use mini-batches to control the stability of training [3]. The data distribution within each batch may be biased, so the training data should be shuffled before they are split into batches. TensorFlow provides a convenient API, tf.data, that helps organize the given data.
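Here is a minimal `tf.data` sketch of these steps. It assumes NumPy inputs of shape `(N, 28, 28)` with integer labels. The function name `make_dataset`, the batch size of 32 and the full-length shuffle buffer are assumptions for illustration, and the demo arrays are random stand-ins.

```python
import numpy as np
import tensorflow as tf

def make_dataset(x, y, batch_size=32, shuffle=True, num_classes=10):
    """Reshape, normalize, and one-hot encode MNIST-style arrays, then shuffle and batch them."""
    # (N, 28, 28) -> (N, 28, 28, 1): Conv2D expects a trailing channel axis
    x = x[..., np.newaxis].astype("float32")
    # Normalize pixel values from [0, 255] to [0, 1]
    x = x / 255.0
    # Label encoding (e.g. 3) -> one-hot encoding (e.g. [0, 0, 0, 1, 0, 0, 0, 0, 0, 0])
    y = tf.one_hot(y, depth=num_classes)
    ds = tf.data.Dataset.from_tensor_slices((x, y))
    if shuffle:
        # Shuffle the whole set before splitting it into batches
        ds = ds.shuffle(buffer_size=len(x))
    return ds.batch(batch_size)

# Random stand-in arrays with MNIST's shapes (NOT the real dataset)
rng = np.random.default_rng(0)
x_demo = rng.integers(0, 256, size=(100, 28, 28)).astype(np.uint8)
y_demo = rng.integers(0, 10, size=(100,))

train_ds = make_dataset(x_demo, y_demo)
for images, labels in train_ds.take(1):
    print(images.shape, labels.shape)  # (32, 28, 28, 1) (32, 10)
```

On the real data, use `make_dataset(x_train, y_train)` for training and `make_dataset(x_test, y_test, shuffle=False)` for testing.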

3. Model

The model consists of two parts: feature extraction and classification. The input feature data are images, so the feature extraction stage uses two-dimensional convolution filters (Conv2D). Alongside Conv2D, it uses rectified linear units (ReLU), pooling and dropout [4]. The classification stage uses a fully connected network (FCN). A softmax function at the end of the FCN outputs a probability for each class label.
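One possible Keras version of this architecture is sketched below. Only the layer types follow the description above. The filter counts, kernel sizes, dense width and dropout rates are assumptions, not values from the original code.

```python
import tensorflow as tf

def build_model(num_classes=10):
    """Feature extraction (Conv2D, ReLU, pooling, dropout) + fully connected classifier."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        # Feature extraction
        tf.keras.layers.Conv2D(32, kernel_size=3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(pool_size=2),  # 28x28 -> 14x14
        tf.keras.layers.Conv2D(64, kernel_size=3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(pool_size=2),  # 14x14 -> 7x7
        tf.keras.layers.Dropout(0.25),
        # Classification
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dropout(0.5),
        # Softmax turns the 10 scores into class probabilities
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])

model = build_model()
probs = model(tf.zeros((2, 28, 28, 1)))
print(probs.shape)  # (2, 10)
```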

(Optional) Visualization of the model’s layers

Conv2D

Activation

Pooling

Fully Connected
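The NumPy sketch below shows what each of these layer types computes on one single-channel image. It is illustrative only. The 5×5 input, the 2×2 edge kernel and the dense weights are made-up values, and the convolution is "valid" with stride 1. TensorFlow does not implement the layers this way internally.

```python
import numpy as np

def conv2d_valid(x, k):
    """Slide kernel k over x with stride 1 and no padding (cross-correlation, as in Conv2D)."""
    kh, kw = k.shape
    out = np.zeros((x.shape[0] - kh + 1, x.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def relu(x):
    """Activation: keep positive values, zero out the rest."""
    return np.maximum(x, 0)

def max_pool_2x2(x):
    """Max over non-overlapping 2x2 windows (an odd trailing row/column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def dense(x, W, b):
    """Fully connected: flatten the input, then apply an affine map."""
    return x.reshape(-1) @ W + b

# Made-up 5x5 "image": a bright vertical bar in columns 2 and 3
x = np.zeros((5, 5))
x[:, 2:4] = 9.0
k = np.array([[1.0, -1.0],
              [1.0, -1.0]])          # responds to left-minus-right brightness changes

feat = conv2d_valid(x, k)            # every row: [0, -18, 0, 18]
act = relu(feat)                     # every row: [0, 0, 0, 18]
pooled = max_pool_2x2(act)           # [[0, 18], [0, 18]]
W = np.array([[0.5, -0.5]] * 4)      # made-up weights: 4 inputs -> 2 outputs
logits = dense(pooled, W, np.zeros(2))  # [18, -18]
```

The convolution responds at both edges of the bar with opposite signs. ReLU keeps only the positive response, and pooling halves the resolution while keeping that response.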

4. Graph

Training a model requires a suitable loss object and optimizer. We also need to set up graphs that run a train step and a test step with them. In the train step, the loss object calculates the loss and the optimizer adjusts the weight variables. In the test step, the loss object only measures the performance of the model, so no optimizer is used.

5. Train & Evaluate

We can now train the model and check its performance, using the dataset and graphs prepared in the previous steps.

PyTorch version

1. EDA

EDA is skipped because the process is the same as in the TensorFlow version.

2. Data preprocessing
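In PyTorch, the common route is `torchvision.datasets.MNIST` with `transforms.ToTensor()`. That transform already adds the channel dimension and scales pixels to [0, 1]. For NumPy arrays like the ones used in the TensorFlow version, a minimal equivalent is sketched below. `make_loader` is a hypothetical name and the batch size of 32 is assumed. No one-hot step is needed here, because `nn.CrossEntropyLoss` takes integer class indices.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

def make_loader(x, y, batch_size=32, shuffle=True):
    """Add a channel axis, scale to [0, 1], and wrap the arrays in a DataLoader."""
    # PyTorch convolutions expect channels-first input: (N, 1, 28, 28)
    x = torch.from_numpy(x).float().unsqueeze(1) / 255.0
    # Integer class indices, as nn.CrossEntropyLoss expects (no one-hot encoding)
    y = torch.from_numpy(y).long()
    # shuffle=True reshuffles the samples at the start of every epoch
    return DataLoader(TensorDataset(x, y), batch_size=batch_size, shuffle=shuffle)

# Random stand-in arrays with MNIST's shapes (NOT the real dataset)
rng = np.random.default_rng(0)
x_demo = rng.integers(0, 256, size=(100, 28, 28)).astype(np.uint8)
y_demo = rng.integers(0, 10, size=(100,))

loader = make_loader(x_demo, y_demo)
xb, yb = next(iter(loader))
print(xb.shape, yb.shape)  # torch.Size([32, 1, 28, 28]) torch.Size([32])
```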

3. Model
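A PyTorch counterpart of the TensorFlow model might look like the sketch below. The layer sizes are assumptions. One real difference from the Keras version is that this network returns raw scores (logits) instead of softmax probabilities, because `nn.CrossEntropyLoss` applies log-softmax internally. Apply `torch.softmax` yourself when you need probabilities.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    """Conv2d/ReLU/pooling/dropout feature extractor + fully connected classifier."""

    def __init__(self, num_classes=10):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3, padding=1)
        self.pool = nn.MaxPool2d(2)
        self.dropout = nn.Dropout(0.25)
        self.fc1 = nn.Linear(64 * 7 * 7, 128)
        self.fc2 = nn.Linear(128, num_classes)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))   # (N, 32, 14, 14)
        x = self.pool(F.relu(self.conv2(x)))   # (N, 64, 7, 7)
        x = self.dropout(torch.flatten(x, 1))  # (N, 3136)
        x = F.relu(self.fc1(x))
        # Raw scores (logits); softmax is folded into nn.CrossEntropyLoss
        return self.fc2(x)

model = Net()
logits = model(torch.zeros(2, 1, 28, 28))
print(logits.shape)  # torch.Size([2, 10])
```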

(Optional) Explanation of the model’s layers

Conv2d

Pooling

Fully Connected
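One way to see what each layer does is to pass a dummy image through standalone layers and watch the tensor shape change. The layer sizes are assumed for illustration. The convolution uses no padding, so its effect on the spatial size is visible.

```python
import torch
import torch.nn as nn

x = torch.zeros(1, 1, 28, 28)  # one single-channel 28x28 image
layers = [
    ("Conv2d", nn.Conv2d(1, 32, kernel_size=3)),   # no padding: 28 -> 26
    ("ReLU", nn.ReLU()),                           # shape unchanged
    ("MaxPool2d", nn.MaxPool2d(2)),                # 26 -> 13
    ("Flatten", nn.Flatten()),                     # 32 * 13 * 13 = 5408 features
    ("Linear", nn.Linear(32 * 13 * 13, 10)),       # fully connected -> 10 class scores
]

shapes = []
for name, layer in layers:
    x = layer(x)
    shapes.append((name, tuple(x.shape)))
    print(f"{name:10s} -> {tuple(x.shape)}")
```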

4. Train & Evaluate
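Below is a minimal train-and-evaluate loop. It assumes random stand-in data with MNIST's shapes and a one-layer model so the block runs on its own. With random labels, the accuracy is meaningless. `train_one_epoch` and `evaluate` are hypothetical helper names. Here `zero_grad`, `backward` and `step` play the role that the gradient tape and `apply_gradients` play in the TensorFlow version.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Stand-in pieces (assumptions): random MNIST-shaped data, random labels, a tiny model
x = torch.rand(256, 1, 28, 28)
y = torch.randint(0, 10, (256,))
train_loader = DataLoader(TensorDataset(x[:192], y[:192]), batch_size=32, shuffle=True)
test_loader = DataLoader(TensorDataset(x[192:], y[192:]), batch_size=32)
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))

criterion = nn.CrossEntropyLoss()  # expects logits and integer class indices
optimizer = torch.optim.Adam(model.parameters())

def train_one_epoch():
    model.train()  # puts layers such as dropout (if any) in training mode
    total = 0.0
    for images, labels in train_loader:
        optimizer.zero_grad()          # clear gradients from the previous batch
        loss = criterion(model(images), labels)
        loss.backward()                # compute gradients of the loss
        optimizer.step()               # adjust the weights
        total += loss.item() * images.size(0)
    return total / len(train_loader.dataset)

@torch.no_grad()  # evaluation needs no gradients and no weight updates
def evaluate():
    model.eval()
    total_loss, correct = 0.0, 0
    for images, labels in test_loader:
        logits = model(images)
        total_loss += criterion(logits, labels).item() * images.size(0)
        correct += (logits.argmax(dim=1) == labels).sum().item()
    n = len(test_loader.dataset)
    return total_loss / n, correct / n

history = []
for epoch in range(3):
    train_loss = train_one_epoch()
    test_loss, test_acc = evaluate()
    history.append(train_loss)
    print(f"epoch {epoch + 1}: train loss {train_loss:.4f}, "
          f"test loss {test_loss:.4f}, test acc {test_acc:.4f}")
```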

References

[1] A. J. SpiderCloud Wireless, “Why using one-hot encoding for training classifier,” LinkedIn. [Online]. Available: https://www.linkedin.com/pulse/why-using-one-hot-encoding-classifier-training-adwin-jahn. [Accessed: 26-Oct-2019].

[2] U. Jaitley, “Why Data Normalization is necessary for Machine Learning models,” Medium, 09-Apr-2019. [Online]. Available: https://medium.com/@urvashilluniya/why-data-normalization-is-necessary-for-machine-learning-models-681b65a05029. [Accessed: 26-Oct-2019].

[3] J. Brownlee, “How to Control the Stability of Training Neural Networks With the Batch Size,” Machine Learning Mastery, 03-Oct-2019. [Online]. Available: https://machinelearningmastery.com/how-to-control-the-speed-and-stability-of-training-neural-networks-with-gradient-descent-batch-size/. [Accessed: 26-Oct-2019].

[4] G. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, “Improving neural networks by preventing co-adaptation of feature detectors,” arXiv:1207.0580, 2012. [Online]. Available: https://arxiv.org/abs/1207.0580. [Accessed: 26-Oct-2019].

[5] A. S. V, “Understanding Activation Functions in Neural Networks,” Medium, 30-Mar-2017. [Online]. Available: https://medium.com/the-theory-of-everything/understanding-activation-functions-in-neural-networks-9491262884e0. [Accessed: 26-Oct-2019].

[6] “Pooling,” Unsupervised Feature Learning and Deep Learning Tutorial. [Online]. Available: http://deeplearning.stanford.edu/tutorial/supervised/Pooling/. [Accessed: 26-Oct-2019].